Six Web Performance Technologies to Watch in 2020

Introduction

Reading the technical press, you would be forgiven for thinking that 2020 is going to be a great year for web performance. Repeatedly touted are the blazing-fast speeds we will achieve with 5G, the fundamental improvements that HTTP/3 will bring and the claim that new devices will be faster than premium laptops.

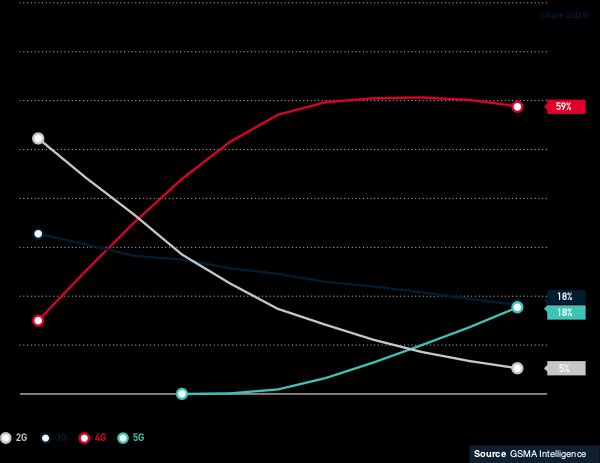

I fear this outlook could make us lackadaisical about performance. 5G isn’t expected to reach even 20% of subscribers by 2025, HTTP/3 changes neither the speed of light nor the size of our JavaScript bundles, and ultra-fast devices will only reach the hands of the affluent few. In this article I'll look at some of the technologies which I believe could have a significant impact on web performance in 2020.

You can jump straight ahead to the sections on:

- JAMstack

- WebAssembly

- Edge Compute

- Observability

- Browser Platform Improvements

- Web Monetisation

- Honourable Mentions

TL;DR: there will be no quick fixes. If we want a fast web in 2020, we have to take action now.

5G will deploy slowly and will never replace 4G; that’s not the point of it. I believe we will see better traction with 5G home broadband than with mobile connections, not least because handling multiple gigabits per second of data will demand more from your battery than the technology of 2020 can supply.

HTTP/3 will hopefully fix the flawed implementations of stream prioritisation in HTTP/2. The protocol is implemented very differently from H/2 (and H/1.x for that matter), but it still works within the same constraints. It will not rely on TCP’s congestion control, but congestion control is still needed, so QUIC handles it at the application layer instead. H/2 was hailed as a breakthrough for performance over H/1, until it was widely deployed. For many sites it made little difference, for some it brought a positive improvement and for some it made things worse. H/3 will likely show a similar outcome, with the actual gains determined by the architecture of individual sites.

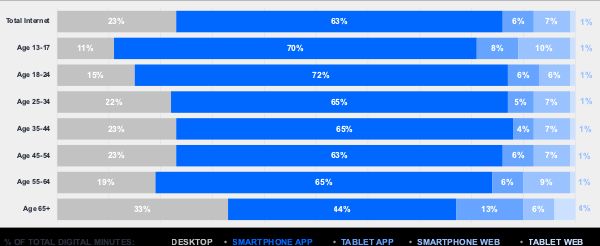

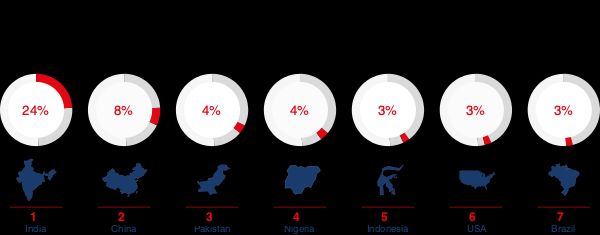

Both of these technologies, 5G and H/3, sit at the network level: how the pipes are built and how best to get information across them. But in 2019, JavaScript has the single biggest impact on user experience. Delivering a 1MB JS application bundle to a mid-range Android phone 500ms faster will do little to reduce the time it takes to parse and execute that code. I say mid-range Android phone because that is the median device web users have. In the US, Apple’s share of Q1 2019 sales was 39% and Samsung’s 28%, while the rest was a range of Android devices selling for between $50 and $500.

We know that the average American replaces their phone every 32 months, and worldwide the combined market share of the premium brands Apple and Samsung is just 33%. Most users worldwide are on low- to mid-range Android phones, and these consumers are far more likely to use a native app than the mobile web, with perceived performance and data usage limits being the primary factors. The web is an open and democratic place; when people are forced to use only native apps, they have a limited window on the web experience and the knowledge available on it.

There has been a fundamental shift on the web over the past half-decade: from thick servers and thin clients to thin servers and thick clients. The inexorable rise of frameworks such as Angular, React, Vue and their many cousins has been driven by an assumption that managing state in the browser is quicker than making a request to a server. This assumption, I suspect, is made by developers who have flagship mobile devices or primarily work on desktop devices. We now find ourselves in a position where every website might bring with it a whole application framework, perhaps with hacks like server-side rendering and client-side rehydration to appease SEO crawlers. While this trend is unlikely to change course in 2020, we may see better web platform features to account for it.

There are a few exciting technologies which I think will be a positive change for web performance in 2020:

1 - JAMstack

The JAMstack (JavaScript, APIs and Markup) is an architecture for delivering fast sites using static site generators (SSGs). SSGs such as Jekyll, Hugo and 11ty offer a pleasant developer experience and rapid iteration, but compile to HTML, the native language of the web. These generators are currently widely used for personal blogs and small company sites due to their small footprint. Moves towards headless CMS solutions, client-side payment APIs and cloud deployment could make SSGs viable for anything from news websites to ecommerce sites, effectively removing server processing time as a critical factor in performance and simplifying deployment.
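To make the build-time model concrete, here is a minimal sketch of what an SSG does, written in TypeScript. It is not how Jekyll, Hugo or 11ty are implemented: the `Post` shape, `renderPost` and `buildSite` are hypothetical names, and real generators add Markdown parsing, layouts and asset pipelines. What it shows is that all templating happens once, at build time, and the output is plain HTML.

```typescript
// Minimal SSG sketch: content + template -> HTML, all at build time.
// Post, renderPost and buildSite are hypothetical names for illustration only.

interface Post {
  slug: string;  // used to build the output path
  title: string;
  body: string;  // plain text here; real SSGs would parse Markdown
}

// Escape characters that are significant in HTML so content cannot break the markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render one post into a complete HTML document.
function renderPost(post: Post): string {
  const title = escapeHtml(post.title);
  const paragraphs = post.body
    .split(/\n{2,}/) // a blank line separates paragraphs
    .map((p) => `<p>${escapeHtml(p.trim())}</p>`)
    .join("\n");
  return [
    "<!doctype html>",
    `<html lang="en"><head><meta charset="utf-8"><title>${title}</title></head>`,
    `<body><h1>${title}</h1>\n${paragraphs}</body></html>`,
  ].join("\n");
}

// "Build" the site: map each output path to its HTML. A real generator would write
// these files to disk for a CDN to serve with no per-request server processing.
function buildSite(posts: Post[]): Map<string, string> {
  const pages = new Map<string, string>();
  for (const post of posts) {
    pages.set(`/${post.slug}/index.html`, renderPost(post));
  }
  return pages;
}

const site = buildSite([{ slug: "hello", title: "Hello & welcome", body: "First.\n\nSecond." }]);
console.log(Array.from(site.keys()));
```

Because every page is rendered ahead of time, the server's only remaining job is to hand back a file, which is exactly what CDNs are good at.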

Netlify has emerged as the market leader in workflow for the JAMstack. Netlify manages the whole workflow from development through to CDN deployment and augments the static site with a number of dynamic features. These features, such as identity management, forms and edge functions, broaden what is possible with the JAMstack. There are also some great developer-friendly features, such as deploying branches to unique URLs for testing, which removes the need for a separate staging environment. While Netlify is the clear leader in this space in 2019, I expect multiple solutions to appear in 2020 and build a competitive marketplace for enterprise JAMstack deployments. This has the potential to significantly erode the WordPress market, which currently accounts for 34% of the web!

Who to follow: @PhilHawksworth & @shortdiv.

2 - WASM (WebAssembly)

WASM has come a long way in the past year, and 2020 might see broader adoption. WASM compiles in-browser 20 times faster than JavaScript, and WASM modules will soon be importable as ES modules in JavaScript projects. The exciting possibility here is that the most processor-intensive parts of popular frameworks, such as React’s virtual DOM, could be rewritten in a performant language such as Rust and compiled to WASM. In that scenario, a single React release could raise the tide of web performance for all React-based websites. Until then, WASM provides a way to implement compute-intensive tasks in a language that performs much better than JavaScript for those workloads.
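For readers who haven't touched WASM yet, this is roughly what calling into it from JavaScript looks like, sketched in TypeScript. To keep it self-contained, the module is a hand-assembled binary with a single exported `add` function; in a real project those bytes would come from a compiler (for example Rust's `wasm32` target) and be fetched over the network. Synchronous compilation with `WebAssembly.Module` is only sensible for tiny modules like this one, since browsers may refuse it for larger modules on the main thread; `WebAssembly.instantiateStreaming` is the usual choice there.

```typescript
// Hand-assembled WASM binary exporting add(a: i32, b: i32) -> i32.
// In practice this would be compiler output, not written by hand.
const ADD_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm" magic number, version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add, end
]);

type AddFn = (a: number, b: number) => number;

// Compile and instantiate synchronously (acceptable for a module this small).
function loadAdd(): AddFn {
  const wasmModule = new WebAssembly.Module(ADD_WASM);
  const instance = new WebAssembly.Instance(wasmModule, {});
  return instance.exports.add as AddFn;
}

const add = loadAdd();
console.log(add(2, 40)); // 42
```

From the JavaScript side the export is just an ordinary function; any performance benefit lives in whatever the compiled module does behind it. Note that i32 arithmetic wraps on overflow, unlike JavaScript numbers.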

Who to follow: @PatrickHamann & @_JayPhelps.

3 - Edge Compute

Code executing at the CDN level allows us to offload processing from both client and server onto the CDN platform. Fastly’s edge compute platform already runs WASM (or something very similar). All major CDNs have now launched some form of edge compute service (see AWS Lambda@Edge, Cloudflare Workers and Akamai EdgeWorkers), and interestingly, all are built completely differently! Edge compute lets us move legacy server logic closer to the user, and move intensive logic off the client onto more controlled compute resources. Great applications of this are personalisation and image manipulation: both are tough to implement on the client but work well in server environments.
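As an illustration of the image-manipulation case, the decision an edge function makes can be sketched as a pure function from request headers to an image URL. This is vendor-neutral TypeScript that deliberately avoids any CDN's actual API: the `?w=<width>&fmt=<format>` URL scheme and the list of widths are assumptions, and the width comes from the `Width`/`Viewport-Width` client hints, which browsers only send when a site opts in.

```typescript
// Vendor-neutral sketch: choose an image variant at the edge from request headers.
// Assumption: the "?w=<width>&fmt=<format>" URL scheme and WIDTHS list are
// hypothetical; a real CDN image service defines its own parameters.

type RequestHeaders = Record<string, string | undefined>; // lower-cased header names

const WIDTHS = [320, 640, 960, 1280, 1920]; // widths the image service can produce
const DEFAULT_WIDTH = 960;                  // used when no usable client hint is sent

// Smallest available width that covers the requested width, else the largest one.
function pickWidth(requested: number): number {
  return WIDTHS.find((w) => w >= requested) ?? WIDTHS[WIDTHS.length - 1];
}

function chooseImageVariant(path: string, headers: RequestHeaders): string {
  // Serve WebP only to browsers that advertise support in the Accept header.
  const accept = headers["accept"] ?? "";
  const format = accept.includes("image/webp") ? "webp" : "jpeg";

  // Width / Viewport-Width client hints are only present if the site opted in.
  const hint = Number(headers["width"] ?? headers["viewport-width"]);
  const requested = Number.isFinite(hint) && hint > 0 ? hint : DEFAULT_WIDTH;

  return `${path}?w=${pickWidth(requested)}&fmt=${format}`;
}
```

Keeping the logic as a plain function makes it easier to port between edge platforms, which matters given how differently each one is built; the request-rewriting wiring is platform-specific and left out here.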

4 - Observability

As the saying goes: "if you can't measure it, you can't manage it". 2019 has seen some significant improvements in observability. We have been moving away from purely technical measures (such as page load time) for a while now, but this year we are seeing more visibility into how browsers process and render content. Metrics such as Cumulative Layout Shift, Total Blocking Time and Largest Contentful Paint aim to get closer to measuring user experience. These simplify the process of measuring speed and reduce the need for developers to instrument their applications. HTTP Archive and the Chrome User Experience Report (CrUX) both provide great comparative databases of performance statistics, and Lighthouse CI provides an integration point to take all of this data and add performance testing to build processes.
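Part of the appeal of these metrics is that the browser does the instrumentation. As a sketch of what CLS actually measures, here is its original definition in TypeScript: the sum of the scores of all layout shifts not caused by recent user input (later revisions of the metric group shifts into session windows instead). `LayoutShiftLike` is a hypothetical interface covering just the two fields used from the browser's `layout-shift` performance entries; the commented lines show how those entries could be collected with a `PerformanceObserver` in Chromium-based browsers.

```typescript
// Just the fields we need from a browser "layout-shift" PerformanceEntry.
interface LayoutShiftLike {
  value: number;           // layout shift score of this single shift
  hadRecentInput: boolean; // true if the shift closely followed user input
}

// Original CLS definition: sum of all layout shift scores over the page's lifetime,
// ignoring shifts that happened just after user input (those are expected).
function cumulativeLayoutShift(entries: LayoutShiftLike[]): number {
  return entries
    .filter((entry) => !entry.hadRecentInput)
    .reduce((sum, entry) => sum + entry.value, 0);
}

// In a Chromium-based browser the entries could be gathered like this:
// const shifts: LayoutShiftLike[] = [];
// new PerformanceObserver((list) => {
//   for (const entry of list.getEntries()) shifts.push(entry as unknown as LayoutShiftLike);
// }).observe({ type: "layout-shift", buffered: true });
```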

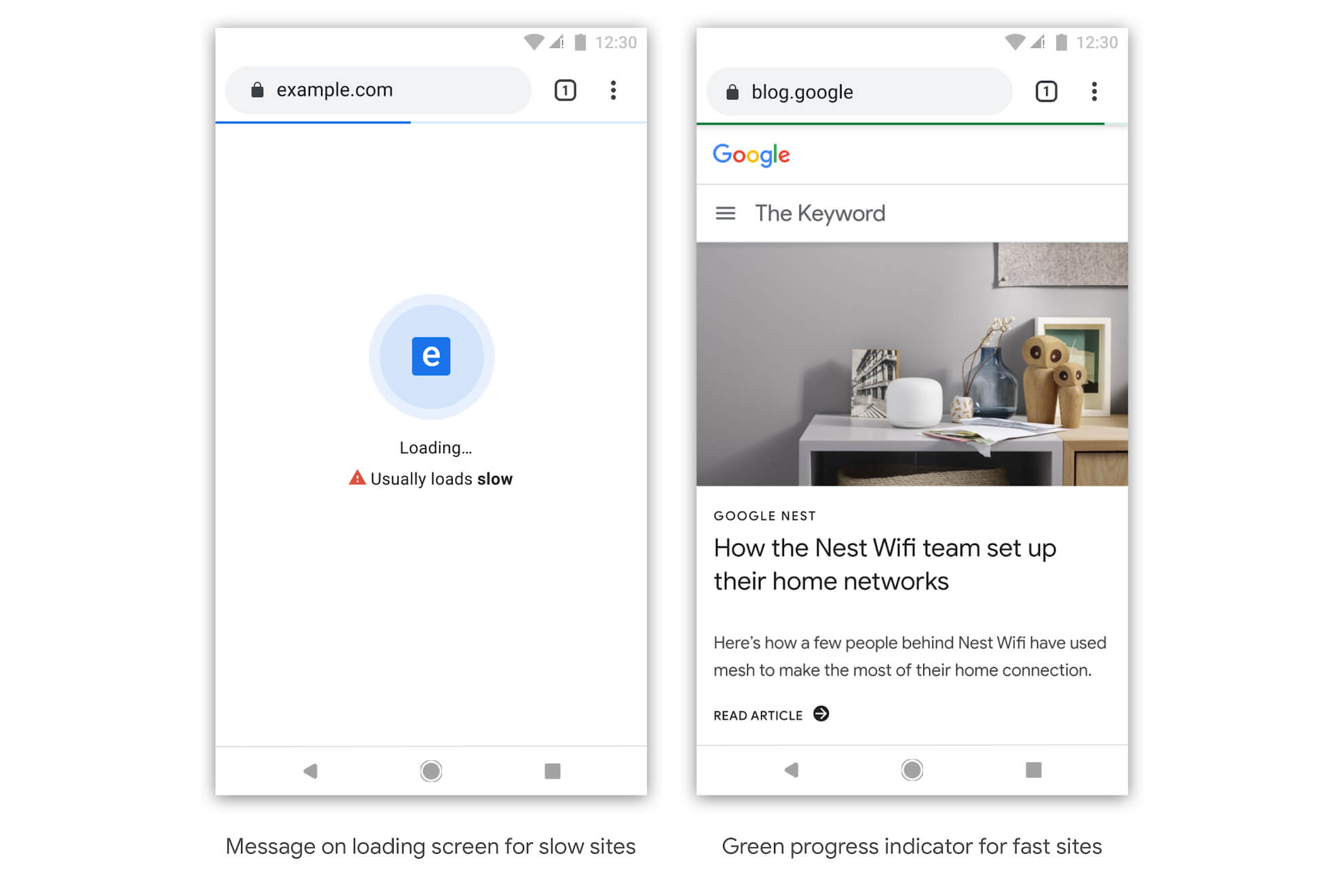

Google has proposed a "slow" badge for websites. The stated goal is noble, "moving towards a faster web", but time and time again we have seen that the 'performance police' approach fails to effect meaningful change. Who knows what the workarounds will be: loading screens like it's 1999, screenshots rendered while the page loads in the background, or UA sniffing to deliver a faster experience to Googlebot.

As a counterpoint, performance metrics for the slowest one-third of traffic improved by up to 20% after Google included page speed as a ranking factor in mobile search results. So it seems that imposing these perceived penalties does work, perhaps because technical SEO gets more budget than web performance?

For the slowest one-third of traffic, we saw user-centric performance metrics improve by 15% to 20% in 2018. As a comparison, no improvement was seen in 2017.

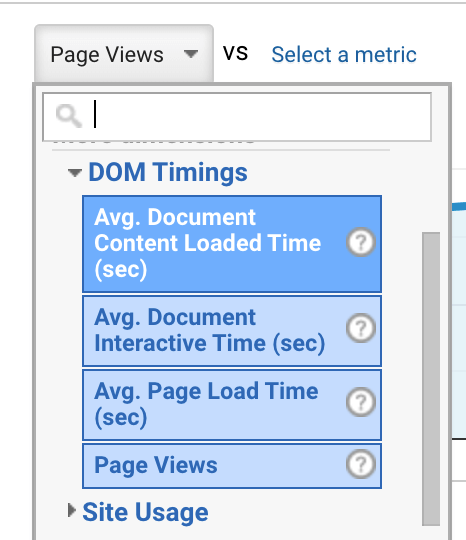

This stick-rather-than-carrot approach is a bit of a poke in the eye, especially considering that a huge hole in performance observability comes from Google Analytics. GA is the most widely used third-party tag on the planet, collecting data from the majority of the sites you visit. GA provides 'Site Speed' reports, which should be a good thing, yet out of the box GA only supports the most basic legacy performance measures. These are randomly sampled from 1% of pageviews, with no ability to correlate performance with business outcomes. Worse still, the only aggregate statistic available is the arithmetic mean, which is nearly useless for web performance data: load times are long-tailed, so a handful of very slow pageviews can drag the mean far away from what a typical user experiences.
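A few lines of TypeScript show why the mean misleads. The load times below are made up purely for illustration: nine pageviews around 1.3 seconds and one pathological 30-second outlier. The mean lands on a value no user actually experienced, whereas percentiles describe the typical experience and the tail separately. `percentile` here uses the simple nearest-rank method; RUM tools may interpolate differently.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.max(rank, 1) - 1];
}

function mean(samples: number[]): number {
  return samples.reduce((sum, x) => sum + x, 0) / samples.length;
}

// Illustrative (made-up) page load times in ms: most users are fast, one is very slow.
const loadTimes = [1200, 1300, 1250, 1400, 1350, 1280, 1320, 1380, 1310, 30000];

console.log(mean(loadTimes));           // 4179
console.log(percentile(loadTimes, 50)); // 1310
console.log(percentile(loadTimes, 75)); // 1380
console.log(percentile(loadTimes, 95)); // 30000
```

The median and 75th percentile say "most users wait about 1.3 seconds"; the 95th percentile flags the tail. The mean of roughly 4.2 seconds describes nobody.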

There is still a long way to go to improve observability. As an industry we could instantly improve observability for at least thirty million websites by simply improving the default performance data presented in Google Analytics.

It is critical to measure site speed using a Real User Measurement (RUM) solution; otherwise you have zero visibility of how your users perceive the performance of your application (or worse, metrics that point you in the wrong direction). For the simplest setup, go for SpeedCurve LUX. For the most flexibility and an enterprise solution, go for Akamai mPulse. If you already have an Application Performance Monitoring (APM) solution, it may offer a module to measure real user performance, such as Dynatrace UEM or New Relic Browser. Whatever solution you choose, make sure that you can measure what is important to your users and your product, and correlate speed with business success.
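Correlating speed with business success doesn't require anything exotic once RUM data is available. A common approach is to bucket sessions by load time and compare conversion rates across buckets. The TypeScript sketch below assumes a hypothetical `Session` record (a load-time metric from your RUM beacon plus a converted flag) rather than the export format of any particular product; the bucket width is a parameter because the right granularity depends on traffic volume.

```typescript
// Hypothetical RUM session record.
interface Session {
  loadTimeMs: number; // e.g. a paint or load metric from the RUM beacon
  converted: boolean; // did this session reach the business goal?
}

interface Bucket {
  label: string;          // e.g. "1-2s"
  sessions: number;
  conversionRate: number; // fraction between 0 and 1
}

// Group sessions into fixed-width load-time buckets and compute conversion per bucket.
function conversionBySpeed(sessions: Session[], bucketMs = 1000): Bucket[] {
  const groups = new Map<number, { total: number; converted: number }>();
  for (const s of sessions) {
    const key = Math.floor(s.loadTimeMs / bucketMs);
    const g = groups.get(key) ?? { total: 0, converted: 0 };
    g.total += 1;
    if (s.converted) g.converted += 1;
    groups.set(key, g);
  }
  return Array.from(groups.entries())
    .sort(([a], [b]) => a - b) // fastest bucket first
    .map(([key, g]) => ({
      label: `${(key * bucketMs) / 1000}-${((key + 1) * bucketMs) / 1000}s`,
      sessions: g.total,
      conversionRate: g.converted / g.total,
    }));
}
```

A conversion rate that falls as buckets get slower is the kind of chart that tends to unlock performance budget, though on its own it shows correlation rather than causation.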

I can only hope that the web will get faster as metrics improve, RUM becomes more prevalent and performance statistics become part of executive reports. Slow sites cost money, and observability helps us to allocate funds to improve performance.

Who to follow: @anniesullie & @eanakashima.

5 - Platform improvements

A lot of improvements are coming from browsers like Chrome. Chrome has offered a Data Saver mode since 2013, which has very high opt-in rates on low-end devices. In most cases this mode sends a "Save-Data: on" request header, a hint to web servers to deliver a lighter page (which could be handled at the edge to deliver a fast, light experience). Chrome also shipped the loading attribute in 2019, which lets developers mark images and iframes to be lazy loaded. Combining these two, Chrome will by default lazy load offscreen images for users on poor connections or with Data Saver enabled. This simple change to the web platform should have a significant impact on web performance, without the intervention of web developers. While this may feel intrusive, browsers have long enforced certain policies for reasons of privacy. Adding performance as a signal in these decisions should mean a more equitable web for all users.
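Both hints are easy to respect on the server or at the edge as well. The TypeScript sketch below checks for the `Save-Data: on` request header and renders `<img>` tags with `loading="lazy"` for anything outside the initial viewport. The `ImageOptions` shape and the `-lite` file-naming convention for lighter image variants are hypothetical; explicit `width` and `height` are included so lazily loaded images don't cause layout shifts.

```typescript
// Hypothetical description of an image on the page.
interface ImageOptions {
  src: string;
  alt: string;
  width: number;
  height: number;
  aboveTheFold: boolean; // visible in the initial viewport, so should load eagerly
}

// Chrome sends "Save-Data: on" when Data Saver is enabled.
function wantsLightPage(headers: Record<string, string | undefined>): boolean {
  return (headers["save-data"] ?? "").trim().toLowerCase() === "on";
}

// Minimal escaping for values placed inside double-quoted HTML attributes.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Render an <img>. Offscreen images get loading="lazy"; Save-Data requests get a
// lighter variant using a hypothetical "-lite" suffix (photo.jpg -> photo-lite.jpg).
function renderImage(img: ImageOptions, saveData: boolean): string {
  const src = saveData ? img.src.replace(/(\.\w+)$/, "-lite$1") : img.src;
  const lazy = img.aboveTheFold ? "" : ' loading="lazy"';
  return `<img src="${escapeAttr(src)}" alt="${escapeAttr(img.alt)}" ` +
    `width="${img.width}" height="${img.height}"${lazy}>`;
}
```

If responses differ depending on Save-Data, they should be sent with `Vary: Save-Data` so that caches and CDNs keep the light and full variants apart.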

Here's hoping that these changes driven by the Chrome team are standardised and make it to other browsers. They could have a dramatic effect on user experience for those on the worst network connections and those with limited or metered data connections.

Who to follow: @KatieHempenius & @AddyOsmani.

6 - Web monetisation

Much of the slowness of the web comes from our attempts to monetise visitors. From advertising and tracking to personalisation and live chat, these technologies all get in the way of the user experience in order to generate revenue. The business model on the web is not as clear cut as it is on the high street or in the newsagent, and we are still struggling to reconcile the user expectation of a free and open web with the business requirement to generate revenue.

Monetising the web through built-in APIs such as the Payment Request API and the Web Monetization API, or browser features such as Brave Rewards, should make it easier to monetise content without the need for intrusive third-party scripts. This change could bring a drastic improvement in experience for web users in 2020, especially on news and magazine websites.

People spend longer on fast websites, so this could be one of the elusive win-wins of web development.

Who to follow: @Coil & @Interledger.

7 - Honourable mentions

Deliberately missing from these predictions are Google's AMP, Facebook Instant Articles, Service Workers, Web Workers, Progressive Web Apps, Web Virtual Reality and AI. I don't think these technologies are critical for shifting the needle on the median person's web experience, for a range of political and technical reasons. The closest on the list is Progressive Web Apps, as they may be the key to closing the gap between the web and native apps. Samsung recently announced support for including PWAs in its US app store, although it does require you to email them your URL and sign some additional licenses. Google has an odd workaround where you can ship your website as an app in the Play Store, but the process is far from straightforward. Apple, unsurprisingly, is steadfastly protecting its App Store revenue by barely entertaining the idea, although Safari does support some of the critical features. Unfortunately, I don't think we will see a market shift in this respect in 2020.

There are, however, changes afoot which might mean a significant improvement to web UX in the EU. It looks like EU regulators have accepted that existing regulations have led to a web experience laden with oft-ignored (or blindly accepted) GDPR notices. These have become a blight on the web and do little to protect users: the average consent form takes 42 seconds to opt out of non-essential cookies. If consent management moves to the browser, a single native UI could be the only requirement for users to manage their consent, improving default privacy as well as the web experience. This is one of the options being considered for the proposed EU ePrivacy Regulation. At the speed of government, though, I'm not sure we will see change formalised in 2020, let alone adopted and enforced.

The cookie provision, which has resulted in an overload of consent requests for internet users, will be streamlined. The new rule will be more user-friendly, as browser settings will provide for an easy way to accept or refuse tracking cookies and other identifiers. The proposal also clarifies that no consent is needed for non-privacy-intrusive cookies improving internet experience (like to remember shopping cart history) or cookies used by a website to count the number of visitors.

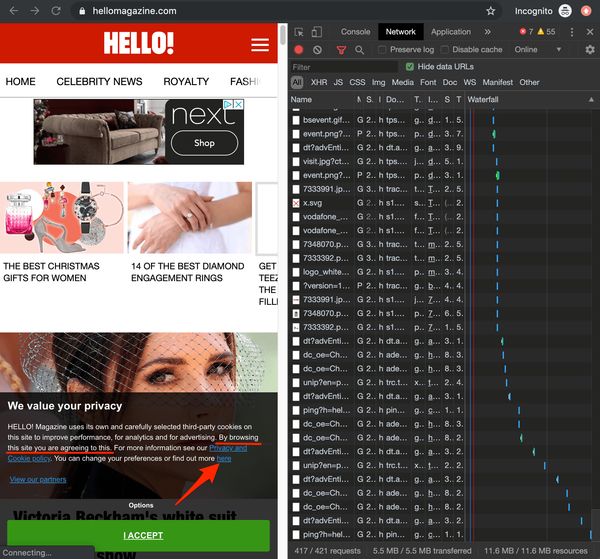

Requiring explicit opt-in, or managing preferences in the browser, could dramatically improve performance on many sites. Hello magazine is an example where over 11MB of content is loaded on the first mobile view, a significant amount of which is third-party. The opt-in here is implicit ("By browsing this site you are agreeing to this"), and the link to manage your preferences becomes inaccessible once you interact with the site.

If you are looking at the future of web performance, the most important thing you can do is to make your experiences fast now. The future is not bringing any silver bullets, which means it is more important than ever to measure and optimise your users' experience.

Further Reading

- Performance Metrics for Blazingly Fast Web Apps

- 5G Will Definitely Make the Web Slower, Maybe

- Speed tooling evolutions: 2019 and beyond [video:23min]

- GSMA Intelligence: Global Mobile Trends 2020 [pdf:3.5MB]

- GSMA Intelligence: The Mobile Economy 2019 [pdf:3.3MB]

- Comscore Global State of Mobile 2019 Whitepaper [registration required]

- All of the talks from Performance.Now() conference 2019 :)